Wheels Within Wheels

Wheels within Wheels was a real-time 64k demo written in collaboration with Simon Kallweit and Vitor Bosshard in early 2012. We entered it at Demodays 2012, a demo competition, where it won first place in its category. Most of the development happened in our free time over about six months, alongside our studies at ETH.

64k demos pose an interesting challenge. The goal of this type of program is to generate an entertaining, engaging music video in real time, under the restriction that the entire program and all of its data must fit into a single executable of at most 64 KB. To illustrate how small this is: a single uncompressed frame of the video is more than 50 times larger than the program that generated it, and one second of uncompressed audio is more than twice as large as the entire program.
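The size comparison can be checked with a few lines of arithmetic. This sketch assumes a 1280x720 frame stored as 8-bit RGBA and CD-quality audio (44.1 kHz, 16-bit, stereo). The text does not state the demo's actual resolution or sample format, so these figures are illustrative only. Under these assumptions the ratios come out at roughly 56x for a frame and 2.7x for a second of audio.

```python
# Rough size comparison against a 64 KB executable.
# Assumed (not stated in the text): a 1280x720 RGBA8 frame and
# CD-quality PCM audio (44.1 kHz, 16-bit samples, 2 channels).

EXE_BYTES = 64 * 1024  # 65,536 bytes


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed video frame in bytes."""
    return width * height * bytes_per_pixel


def audio_bytes_per_second(sample_rate: int, bytes_per_sample: int, channels: int) -> int:
    """Size of one second of uncompressed PCM audio in bytes."""
    return sample_rate * bytes_per_sample * channels


frame = frame_bytes(1280, 720, 4)            # 3,686,400 bytes
audio = audio_bytes_per_second(44100, 2, 2)  # 176,400 bytes

print(f"one frame  = {frame / EXE_BYTES:.1f} x the executable")
print(f"one second = {audio / EXE_BYTES:.1f} x the executable")
```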

Ordinary approaches to programming and content creation clearly don't work here. For example, a composer cannot simply produce an audio track to be played back from a file at runtime, since the file would exceed the size limit by orders of magnitude. Instead, all of the sound must be synthesized at runtime. This requires a fully real-time software synthesizer that fits into 64 KB, which an artist then has to program to produce a good-sounding audio track. Similarly, storing complex meshes or textures within 64 KB is almost impossible, so all geometry and textures have to be generated procedurally at runtime, in real time.
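To illustrate what synthesizing sound at runtime means, here is a toy sketch in Python. It is not the synthesizer used in the demo, which is not described here. A few bytes of note data (start step, MIDI note number, length in steps) are expanded into raw samples by an oscillator and an envelope. The function names, the 44.1 kHz sample rate, and the naive sawtooth with exponential decay are all assumptions chosen for illustration.

```python
import math

SAMPLE_RATE = 44100  # assumed output rate in Hz


def note_to_freq(midi_note: int) -> float:
    """Equal-tempered pitch, with MIDI note 69 (A4) at 440 Hz."""
    return 440.0 * 2.0 ** ((midi_note - 69) / 12.0)


def render_note(midi_note: int, duration_s: float, decay: float = 6.0) -> list[float]:
    """Render one note: a naive (aliasing) sawtooth shaped by an exponential decay."""
    freq = note_to_freq(midi_note)
    out = []
    phase = 0.0
    for i in range(int(duration_s * SAMPLE_RATE)):
        saw = 2.0 * phase - 1.0                     # sawtooth in [-1, 1)
        env = math.exp(-decay * i / SAMPLE_RATE)    # amplitude envelope, starts at 1
        out.append(saw * env)
        phase = (phase + freq / SAMPLE_RATE) % 1.0  # advance oscillator phase
    return out


def render_pattern(notes: list[tuple[int, int, int]], step_s: float) -> list[float]:
    """Mix (start_step, midi_note, length_steps) events into a single sample buffer."""
    total_steps = max(start + length for start, _, length in notes)
    buf = [0.0] * int(total_steps * step_s * SAMPLE_RATE)
    for start, note, length in notes:
        offset = int(start * step_s * SAMPLE_RATE)
        for i, sample in enumerate(render_note(note, length * step_s)):
            if offset + i < len(buf):
                buf[offset + i] += sample
    return buf
```

For example, `render_pattern([(0, 57, 1), (1, 60, 1), (2, 64, 1), (3, 69, 1)], 0.25)` turns four small tuples into one second (44,100 samples) of audio. A practical synthesizer would need band-limited oscillators, filters and effects on top of this. The underlying idea, storing instructions instead of samples, is the same.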

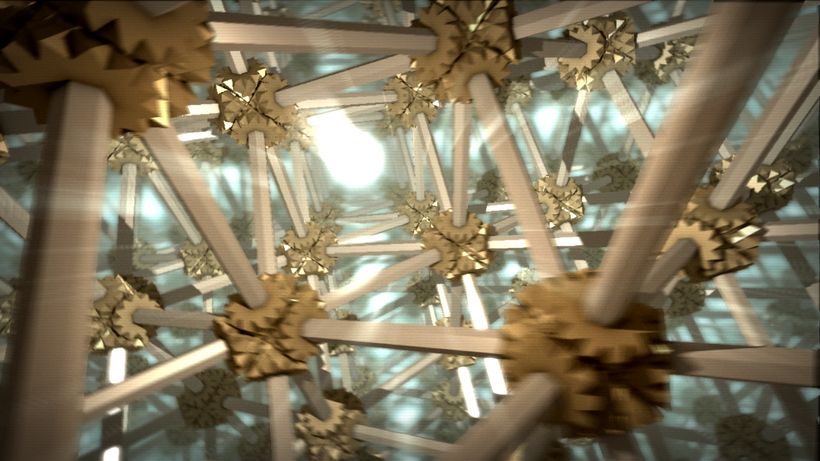

My job on the team was to create a rendering pipeline that could combine all of the different procedural content and render it in real time, together with a stack of post-processing effects that could make any shot look great. It was also my task to expose artistic controls from the rendering pipeline to the rest of the team, so that visual effects could be tweaked for each shot and synchronized with the music. I also contributed some of the procedural content seen in the video, mostly gears, cogs and infinite zooms.
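One common way to expose such controls is as keyframed parameter tracks evaluated against the music's playback position. The text does not say how the demo's controls were stored, so treat this as a hypothetical sketch. It assumes linear interpolation between (beat, value) keys and a fixed tempo. The `Track` class, the parameter names and the 120 BPM default are invented for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical animated parameter: (beat, value) keys, linearly interpolated."""
    keys: list[tuple[float, float]]  # must be sorted by beat

    def value_at(self, beat: float) -> float:
        beats = [b for b, _ in self.keys]
        i = bisect_right(beats, beat)
        if i == 0:
            return self.keys[0][1]       # before the first key: hold first value
        if i == len(self.keys):
            return self.keys[-1][1]      # after the last key: hold last value
        (b0, v0), (b1, v1) = self.keys[i - 1], self.keys[i]
        u = (beat - b0) / (b1 - b0)      # position between the two keys, in [0, 1)
        return v0 + u * (v1 - v0)


def song_time_to_beat(seconds: float, bpm: float) -> float:
    """Convert playback time to a (fractional) beat index at a constant tempo."""
    return seconds * bpm / 60.0


# Hypothetical per-shot controls an artist might tweak.
tracks = {
    "bloom_strength": Track([(0.0, 0.2), (16.0, 1.0), (32.0, 0.4)]),
    "dof_focus_distance": Track([(0.0, 5.0), (32.0, 12.0)]),
}


def evaluate(seconds: float, bpm: float = 120.0) -> dict[str, float]:
    """Sample every track at the current playback time."""
    beat = song_time_to_beat(seconds, bpm)
    return {name: track.value_at(beat) for name, track in tracks.items()}
```

Keying parameters by beat instead of by frame keeps effects locked to the music even when the frame rate varies.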

Completing this task took me deep into real-time GPU programming as well as data compression. Ultimately, I implemented a deferred renderer with a post-processing stack including motion blur, depth of field, god rays, bloom, chromatic aberration, vignetting, scanlines, distortion and color correction. I also researched interesting-looking gear mechanisms on different surfaces, such as spheres, and wrote pixel shaders that generate such content procedurally. Finally, I had to tweak and compress all shaders to fit within the strict size limit.
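Here is one way to generate gears procedurally in a pixel shader, written as a Python emulation of per-pixel code. It is only a sketch under stated assumptions. The demo's actual shaders are not shown here, and the clamped-cosine tooth profile, the flat 2D setting and all names and constants (`gear_distance`, `shade_pixel`, the radii) are hypothetical. It does show the two ingredients such a shader needs: a distance function describing one gear, and rotation angles chosen so that neighboring gears mesh.

```python
import math


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def gear_distance(x: float, y: float, teeth: int, r_root: float,
                  r_tip: float, r_hole: float, angle: float) -> float:
    """Approximate signed distance to a 2D gear centered at the origin.

    Negative inside the gear, positive outside. The rim radius switches
    between r_root and r_tip via a clamped cosine of the polar angle, giving
    flat-topped teeth. The sign is exact, but the magnitude only approximates
    the true Euclidean distance.
    """
    r = math.hypot(x, y)
    theta = math.atan2(y, x) - angle
    tooth = clamp(2.0 * math.cos(teeth * theta) + 0.5, 0.0, 1.0)  # 1 = tooth, 0 = gap
    rim = r_root + (r_tip - r_root) * tooth
    return max(r - rim, r_hole - r)  # toothed outline minus the axle hole


def shade_pixel(u: float, v: float, time: float) -> float:
    """Hypothetical 'pixel shader': gear coverage in [0, 1] at position (u, v)."""
    teeth_a, teeth_b = 12, 8
    # Pitch radii are proportional to tooth count (0.48 : 0.32 = 12 : 8), so both
    # gears share the same tooth spacing; their centers sit 0.8 apart on the x axis.
    angle_a = time
    # Meshing gears counter-rotate with a speed ratio of teeth_a / teeth_b; the
    # pi / teeth_b offset places a gap of gear B at the contact point opposite
    # a tooth of gear A.
    angle_b = -time * teeth_a / teeth_b + math.pi / teeth_b
    d_a = gear_distance(u + 0.48, v, teeth_a, 0.44, 0.52, 0.10, angle_a)
    d_b = gear_distance(u - 0.32, v, teeth_b, 0.28, 0.36, 0.08, angle_b)
    d = min(d_a, d_b)              # union of both gears
    edge = 0.005                   # anti-aliasing width in uv units
    return clamp(0.5 - d / (2.0 * edge), 0.0, 1.0)
```

On the GPU, the same logic would run once per pixel of a full-screen pass with `time` supplied as a uniform. Here, calling `shade_pixel` over a grid of (u, v) values produces the equivalent image on the CPU.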