GPU Fluid

This GPU implementation of a high-quality, offline fluid solver was written in Summer 2013 as a personal exercise in GPGPU. My main goal here was to become more familiar with versatile GPU algorithms such as stream compaction, and at the same time apply them in an exciting, computation heavy setting. The solver is written in C++ and OpenGL 4. The project is available on github.

The fluid simulation itself is implemented using a grid-based Eulerian solver. One of the core ingredients of a Eulerian solver is the pressure solve, which involves computing the solution to a large system of linear equations (more than 1 million variables are typical). Simple solvers such as Gauss-Seidel are not very useful here, and I opted to implement the Preconditioned Conjugate Gradient method (PCG) instead.

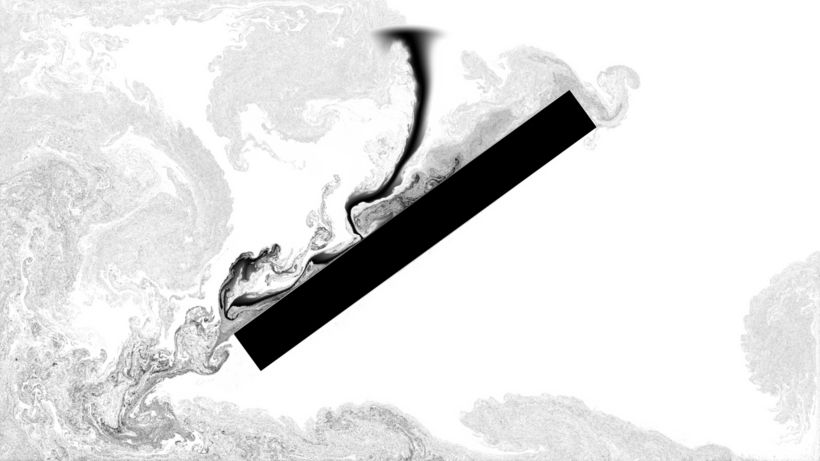

Heavy, black liquid is dispersed onto an obstacle in a container of light, white liquid

Accelerating dot products

Implementing PCG on the GPU can be challenging due to the fact that it requires several dot products per iteration. Dot products are difficult to parallelize efficiently over several hundred cores, since they require summing a large vector into a single scalar. On the GPU, this operation easily becomes the bottleneck of the solver, and I spent a lot of time just optimizing this step.

Firstly, the vector sum is performed in 2D instead of 1D, and stepwise instead of all at once. The input vectors for the dot product are multiplied element-wise and the result stored in a 2D texture, which is then summed to a single scalar step by step. In each step, every shader core computes the sum of a 4x4 texel area, shrinking the texture by a factor of 4 per side at each step, eventually arriving at a 1x1 area (a scalar). This provides a compromise between the number of synchronization steps and the number of cores utilized per sum.

As an additional trick, the texturing unit is used to decrease the number of texture reads. GPUs feature several highly optimized hardware texturing units that can be abused to perform efficient floating point sums. That is, an interpolated texture read placed precisely in the center between four texels will produce the sum of the four texels, divided by four, in a single texture read. This is much more efficient than reading four texels in the shader without interpolation (using texelFetch) and adding them manually. Also, multiple texture reads are performed by each shader core to overlap their latency.

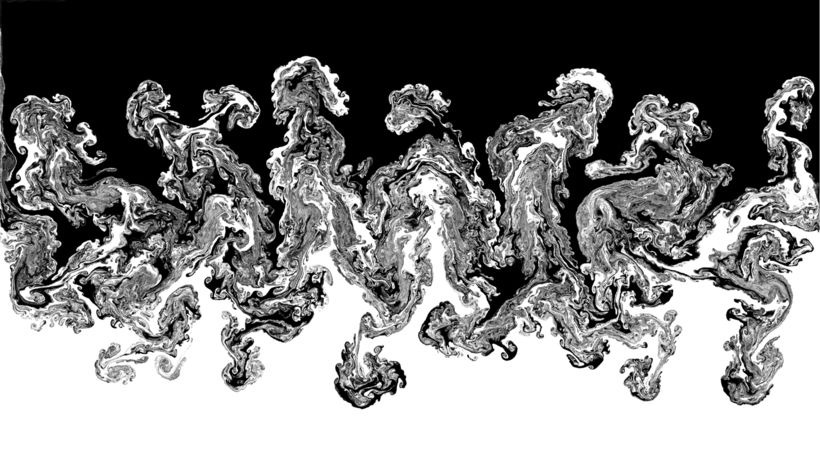

Hot, black smoke rises in a cold container

Finding a preconditioner

The performance of PCG is heavily dependent on the preconditioner, which is a special type of matrix tailored specifically to the class of matrix being inverted (here, a Poisson problem). For CPU implementations, a popular choice is Modified Incomplete Cholesky, which performs excellently in many cases. Unfortunately, it is also inherently sequential, making it infeasible for GPU implementations.

I did some research and discovered the paper Parallelized Incomplete Poisson Preconditioner in Cloth Simulation by Sideris et. al., which describes the Incomplete Poisson preconditioner, tailored towards parallel solvers for Poisson problems. It translates to the GPU without issues and does not perform much worse than Modified Incomplete Cholesky.

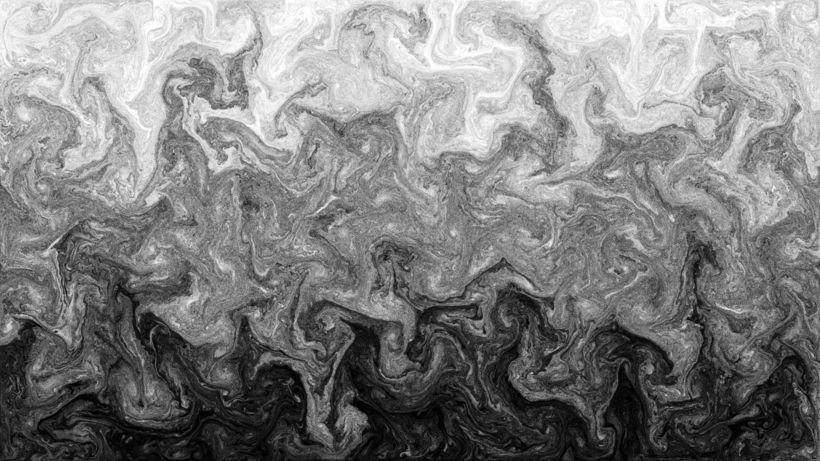

Light, white fluid and heavy, black fluid flow past each other, creating the Kelvin-Helmholtz instability

Managing Particles

Finally, performing accurate advection on the GPU poses additional challenges. Semi-lagrangian approaches are easy to implement on the GPU, but suffer from numerical diffusion; that is, the simulation loses small-scale detail over time and eventually becomes "smoothed out". This is undesirable, since much of the visual fidelity is dependent on the small-scale vortices and other details.

A popular choice of advection method that mostly avoids numerical diffusion is Fluid Implicit Particle (FLIP). On the CPU, this technique is not too difficult to implement. On the GPU however, things are a little bit more complex.

The main problem is that a) lists of particles need to be built for every grid cell and b) possibly many particles need to be created and deleted per frame. Building lists of things and dynamically deleting/creating data points stored in a continuous buffer without the data ever leaving the GPU is a difficult thing to do, and this is where a technique called Histopyramids comes in handy.

Generally speaking, histopyramids allow collecting possibly sparse data points into a packed buffer using prefix sums, in order to evenly distribute work from this buffer to GPU cores. In this case, I am using histopyramids as a simple memory allocator that creates, deletes and sorts particles into lists in a single step. Doing this efficiently requires access to atomic memory operations, which are available as of OpenGL 3.2.